It may not be acknowledged or realised, but there is already a hankering, one that does not dare speak its name, to hand over decision-making, including in government, to robots.

We ask the impossible of politicians – tantamount on occasion to a sort of saintliness. If we see any degree of what, on the highest principles, we can regard as backsliding, we are quick to cast stones at them from our glass houses. We may not say it in so many words. We expect them to be free of any moral blemish, granted foresight of all contingencies and even, it may be said, endowed with a super-power brain so as to be able to absorb a vast welter of information. We then go on to deduce the solution that we personally see as the right one in a given context and condemn them for having got it wrong. We give little allowance for their honesty about their views and excoriate them for a lack of transparency that may be explained simply by their realising the consequences at the ballot box of being honest. We want someone to hate as well as someone to admire. It is all a tall order.

It is difficult to hate a robot and easy to admire one. The mix needed includes respect, and that becomes difficult in these times when everyman feels as good as those ‘set above him’ and can give politicos a piece of his mind with the utmost stridency. It is hard to tax a robot with prejudices. Perhaps what we fear without acknowledging it is that we may need some ruler to fear…

Granted, all this presupposes a degree of intelligence and understanding on the part of A.I. that is not available at the present time.

Africans (unless in Somaliland) usually do not want imperial colonists back in power. They prefer to be ruled by one of their own ilk. The dire results (for now) are plain to see. It seems an endemic human condition. The Chinese resented the attack on their Emperor’s rule by the British – but it is not often admitted in this context that mandarins treated their lower orders like dirt and forbade trade with technically more advanced societies that would have been advantageous to the populace. Leaving aside the question of opium – a blot undoubtedly by our standards – a case could be made that the invaders from Albion had more in common with the present-day Chinese communists than with the homegrown Chinese rulers in a City Forbidden to the people. We gravitate to look-alikes. Perhaps it is an all-too-human tendency that should be reviewed?

Artificial Intelligence tools are already used for personality tests. Personnel managers use A.I. to assess the CVs of job applicants, with algorithms optimised for key words. Cognitive search, with automatic indexing, is less likely to fall short of the ideal of accuracy than more fallible human thinking.

A chatbot, an automated program that interacts with customers like a human, is not an exorbitant cost. Chatbots attend to customers at all times of the day and week and are not limited by time or physical location. This makes their implementation appealing to a lot of businesses that may not have the manpower or financial resources to keep employees working around the clock. Companies, mainly in America, have answering services ‘manned’ by robots whose voices mimic the inflexions of human speech and whose answers cover a wide range of the questions put to them. People may not acknowledge it but their positive reaction to pleasant ways of speaking is ingrained. We warm to helpful greetings and instructions, and these can often be found in programmed responses on a computer voice-link, which compare favourably with gruff unhelpfulness from a human. Ah yes, but… people jib at the idea of handing over authority to anyone not of their own ilk. Is this always sensible?

In terms of Value-systems, why not have all the parameters, all the considerations, fed into computers (when sufficiently advanced) and then let this form of human-originated ‘Big Brother’ dispense rulings, free of special pleading, prejudice or influence?

The quantum leap in terms of the capacity of A.I. to participate at the helm of affairs is one of degree and not one of kind. When it comes to philosophy or moral codes, no one to date has attempted to feed into a super-power computer all the information that is available on the internet, all the scriptures, all the works on philosophy, and so forth. This would seem a mammoth task. It is surely not beyond the wit or determination of people to input this welter of information into A.I. machines however much it may be conceded that this would be anything but a quick fix. The criteria would need very careful consideration by experts in a number of fields but the information itself probably already exists in the ether on the internet. It would need to be a long-term project, manned and staffed by all manner of experts, Talking Heads, pundits and so forth.

Why is allowing our moral and societal codes to be judged by advanced A.I. considered by many to be such a No-No?

Consider the situation as it exists today and has always existed – with easily assessed consequences in terms of the Black Record of history. People who make the decisions that impact on all of us rarely have the time to reflect on the ramifications of what they do. A thousand pressures are on them, from back-room stabbers and the balancing acts that have to be struck to personal greed and so forth. Those round the country in armchairs or saloons, where they all but impotently propound policy, do not stop to grapple with how fine the difference often is between taking one decision or another on the basis of evidence that is rarely all-encompassing at the time, nor with the prejudices and the agendas, personal and public, that are faced in the cockpits and the heat of the kitchens where the decisions are taken. The fact that power brokers are on television makes of them familiar figures whom we can easily imagine round our own tables, and paradoxically this somehow blinds us to the fact that they are but human like the rest of us.

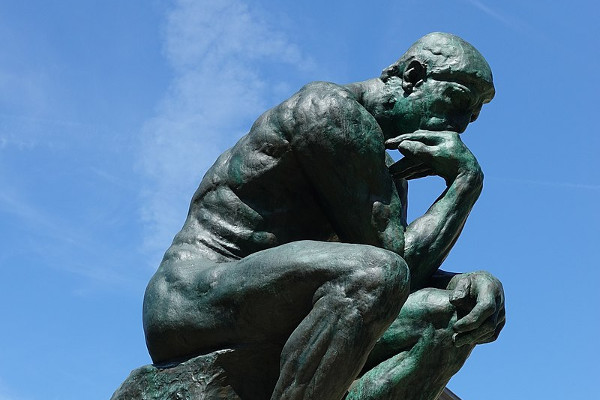

In a sense even human beings are a form of quantum physical machines. The image we see is based on collapsing photons; the way in which our bodies and minds work in many ways is comparable to A.I. machines.

A TomTom or other GPS navigation system individually answers any number of queries about different routes posed at the same time by any number of different drivers.

Could an Artificial Intelligence machine into which all relevant philosophies are inputted have something to teach us?

We may benefit from a little help to straighten out our ideas and values.

A Value-system may be exclusively human in origin but why assume that it cannot be computable for accurate and objective truths by which to live our lives, drawing from a much wider wellspring of evidence than any philosopher or opinion-arbiter can possibly master?

Science fiction is often the harbinger of reality. Remember HAL, the computer in the spaceship of the film 2001? HAL responded in a human-sounding voice to all manner of dilemmas faced by the astronauts aboard. R2-D2 in ‘Star Wars’ had like features. What of the situations that we face without the help of a superpower brain? It would be comforting if we could put our trust in an impartial and rational decision-adviser.

A judge cannot adjudicate between disputants by drawing conclusions from the whole distilled wisdom of the ages, nor is he able to compute every shred of evidence that has ever had relevance, including computer-agreed principles and all precedents. Is a robot likely to be open to bribery or prejudices? No matter how strong the wish to find a compromise between differing ideas, the solution advanced by fallible human beings to any given conundrum can fall short of the ideal. It can be more open to a charge of not being entirely objective than the ruling of a computer programme set up in advance of any particular issue coming before it. The principle of one person cutting for a hand of cards, and the opponent choosing, is admitted as being fair; A.I. as here projected can be seen as an extension of this principle. Must we be ruled by ‘one of our own’, even if such rulers have already proved worse in practice for our genuine interests?

It could be a useful exercise as well as a stimulating mental challenge if consideration ahead of time might be given to assessing the possibilities of decisions, ethical, juristic or political, being put to a super-computer.

In the Pentagon all manner of armchair warriors spend time thinking through a range of crises that may never happen and planning on how to deal with them in strategic papers that we may pray never merit the light of day. The amount of time taken in producing these contingency plans hardly bears thinking of.

This does not need to be a case of All or Nothing.

The jury is still out on all the immense possibilities of A.I., perhaps as one ‘counsellor’ among many ‘sitting’ on a panel of judges. What of all the ethical questions on which faiths or political systems at the moment claim to be dispensers of the talismanic truths? No serious jury or impressive panel of Talking Heads shows any sign of being summoned to get their heads around all the ethical questions that can arise and which need the adjudication of men. Why not at least get this ball rolling?

Come the day when there is any possibility of such a question arising, are we to leave this issue largely to scientists, as has happened in cases of biology and anatomy over which activists clash in a sort of Wild West of conflicting ideas?

It is not just the sum total of all philosophies and history that the super-power computer will need to take in, infinitely beyond the capacity of any man let alone a committee; it is also an understanding of the drives of humans and their emotions. This needs knowledge, not empathy. In Klara and the Sun by Kazuo Ishiguro the robotic shop window dresser tabulates all human reactions dispassionately; “You notice everything!”, says her boss.

We cannot assess the ramifications in the future of every last sensible decision we make but we could inch closer to this ideal of making the correct decisions. How to identify the apocryphal tiny beat of an insect’s particular wing in the Sahara so as to obviate the resultant typhoon in Kowloon may not be feasible, yet who knows if one day a highly developed sensory computer could do even such a job. The awareness of this as a respectable quest may help us in the present to work towards identifying permutations of consequences of decisions to the Nth degree.

This prospect of AI government or law dispensing is not just about facts and writings but about the emotional freight with which almost every sentence is loaded. When and if the day dawns on which this becomes feasible reality, ethics and emotion will need consideration, each word perhaps needing a symposium to ascertain its justified weight in the scales of consensual thinking. The putative rulings in such a situation may not be 99% perfect every time but a 98% possibility of success is vastly better than 97%. Even that lower figure is hugely optimistic about exclusively human thinking after the hash that has been made of government down the ages and the host of dubious ideas on which it has so often been based. What may hang on the right decisions in contexts as yet unforeseen when the fates of millions may hang in the balance? Whose shoulders are broad enough to bear such an oppressive burden and will command sufficient respect? Are we to hope against hope that, come the day of some immense challenge facing mankind, a man will arise who is up to the job of disinterestedly and successfully shouldering the responsibility?

Does an Orwellian dystopia have to be the only outcome of Big Brother? Is E. M. Forster’s The Machine Stops the only valid critique of H. G. Wells’ sci-fi book with the telling title: A Modern Utopia?

The immensity of the task that may lie ahead may be as daunting and require as much planning for future generations as the building of the Great Wall of China, but almost all that is lacking to start the process of weighing up the pros and cons would seem to be sufficient courage.

Cometh the hour, cometh the Machine…!